CDH 6.3.2 集成 spark 3.3.2

参考 https://blog.csdn.net/Mrheiiow/article/details/123007848

1.下载解压

tar -zxvf spark-3.3.2-bin-hadoop2.tgz

mv spark-3.3.2-bin-hadoop2 /opt/cloudera/parcels/CDH/lib/spark3

2.复制配置文件

将spark3/conf下的所有配置全部删除,并将集群spark的conf文件复制到此处

注意:复制的文件配置文件一定要是 yarnresourcemanager 上的

cd /opt/cloudera/parcels/CDH/lib/spark3/conf

rm -rf *

cp -r /etc/spark/conf/* ./

mv spark-env.sh spark-env

添加 hive-site.xml

cp /etc/hive/conf/hive-site.xml /opt/cloudera/parcels/CDH/lib/spark3/conf/

3.创建 spark-sql

vim /opt/cloudera/parcels/CDH/bin/spark-sql

```shell

#!/bin/bash

# Reference: http://stackoverflow.com/questions/59895/can-a-bash-script-tell-what-directory-its-stored-in

export HADOOP_CONF_DIR=/etc/hadoop/conf

export YARN_CONF_DIR=/etc/hadoop/conf

SOURCE="${BASH_SOURCE[0]}"

BIN_DIR="$( dirname "$SOURCE" )"

while [ -h "$SOURCE" ]

do

SOURCE="$(readlink "$SOURCE")"

[[ $SOURCE != /* ]] && SOURCE="$BIN_DIR/$SOURCE"

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

done

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

LIB_DIR=$BIN_DIR/../lib

export HADOOP_HOME=$LIB_DIR/hadoop

# Autodetect JAVA_HOME if not defined

. $LIB_DIR/bigtop-utils/bigtop-detect-javahome

exec $LIB_DIR/spark3/bin/spark-submit --class org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver "$@"

赋权

chmod +x /opt/cloudera/parcels/CDH/bin/spark-sql

配置快捷方式

alternatives --install /usr/bin/spark-sql spark-sql /opt/cloudera/parcels/CDH/bin/spark-sql 1

执行 sudo -uhive spark-sql 报错

com.cloudera.spark.lineage.NavigatorAppListener

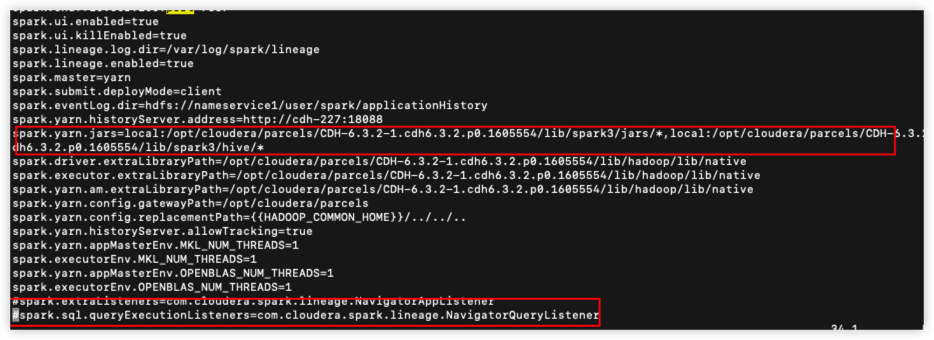

vim /opt/cloudera/parcels/CDH/lib/spark3/conf/spark-defaults.conf

生产环境配置,

修改 spark.yarn.jars hdfs:///spark-yarn/jars/*.jar

注释掉最后两行,将 spark 的 jars 上传到 hdfs 上

sudo -uhdfs hdfs dfs -mkdir -p /spark-yarn/jars/

sudo -uhdfs hdfs dfs -put * /spark-yarn/jars/

sudo -uhdfs hdfs dfs -chmod -R 777 /spark-yarn/jars/

kyuubi 集群版本

https://kyuubi.apache.org/releases.html 下载版本 1.7.1

conf/kyuubi-default.conf

kyuubi.authentication NONE

kyuubi.engine.share.level connection

kyuubi.frontend.thrift.binary.bind.port 10009

kyuubi.ha.addresses 10.0.0.227:2181,10.0.0.228:2181,10.0.0.229:2181

kyuubi.ha.client.class org.apache.kyuubi.ha.client.zookeeper.ZookeeperDiscoveryClient

kyuubi.ha.namespace kyuubi

kyuubi.ha.zookeeper.quorum 10.0.0.227:2181,10.0.0.228:2181,10.0.0.229:2181

kyuubi.ha.zookeeper.session.timeout 600000

kyuubi.session.engine.initialize.timeout 300000

spark.executor.memory 1g

spark.master yarn

#spark.sql.catalog.spark_catalog org.apache.spark.sql.hudi.catalog.HoodieCatalog

#spark.sql.extensions org.apache.spark.sql.hudi.HoodieSparkSessionExtension

spark.submit.deployMode cluster

spark.yarn.driver.memory 1g

spark.yarn.queue default

conf/kyuubi-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export SPARK_HOME=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/spark3

export HIVE_HOME=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive

export HADOOP_CONF_DIR=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hadoop/etc/hadoop

export YARN_CONF_DIR=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hadoop-yarn/etc/hadoop

每一台 kyuubi 配置都是一样的,分别启动即可。

注意:spark 的目录也要拷贝过去

使用 hive 驱动 连接即可

jdbc:hive2://10.0.0.227:2181,10.0.0.228:2181,10.0.0.229:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=kyuubi